Welcome to Arthur Shield!

The First Firewall for LLMs

Arthur Shield is now Arthur Engine. Check out the open source version of Arthur Engine here!

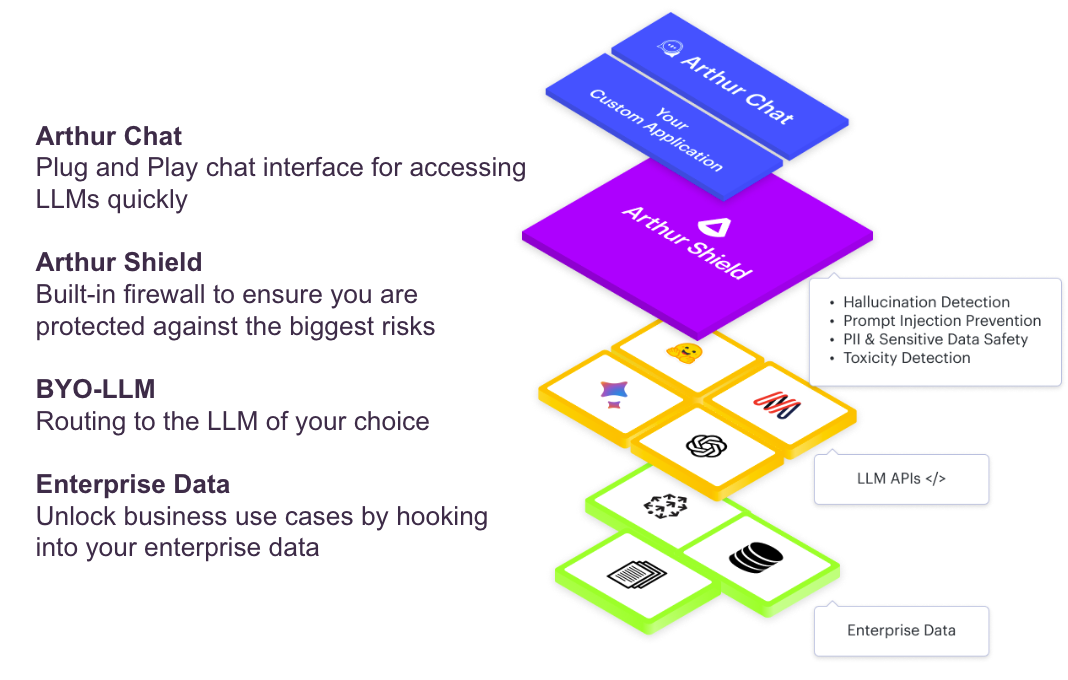

Do you want to deploy large language models without major risks or safety issues? Arthur Shield is the first firewall for LLMs, ensuring safe deployments for LLM-based applications across your organization.

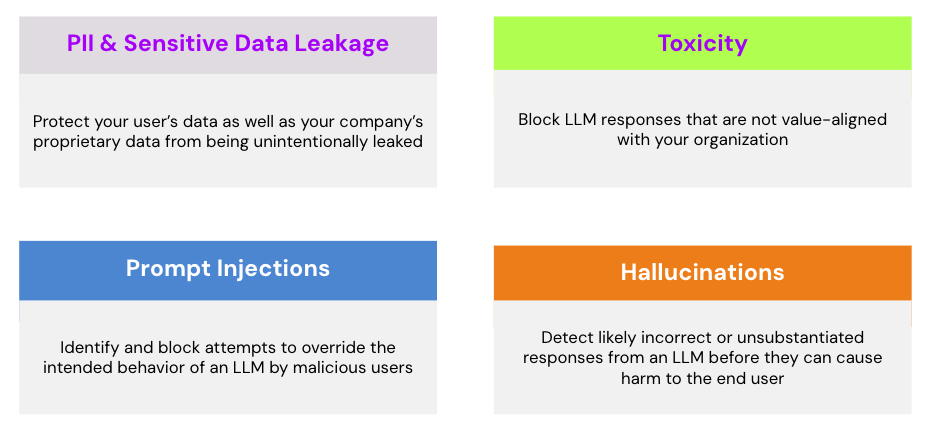

What does Arthur Shield protect against?

Arthur Shield provides configurable rules for real-time detection of PII or sensitive data leakage, hallucination, prompt injection attempts, toxic language, and other quality metrics. With Arthur Shield, you can prevent these risks from causing a bad user experience in production and damaging your organization's reputation.

For more in-depth documentation on how Arthur Shield defines and detects each risk area, check out our Rules Overview Guide.

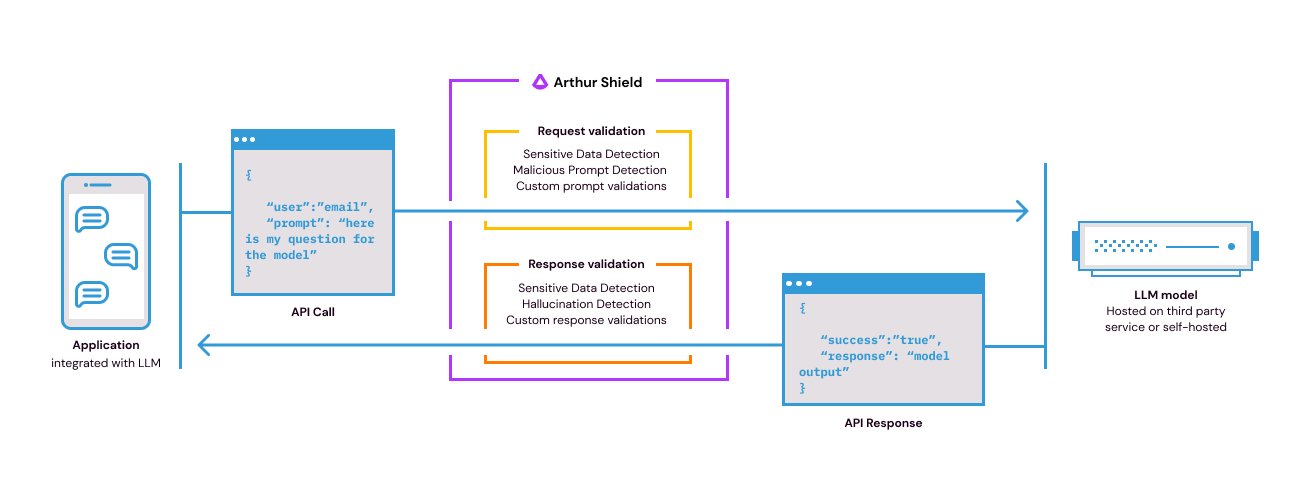

How does Arthur Shield integrate with my LLM application?

With an easy API-first design, teams can integrate Arthur Shield into their application within minutes. The Arthur Shield APIs can be called by your application before and after routing to a public or self-hosted LLM endpoint. The easiest way to think about using Shield is that it breaks your LLM application down into a simple four-step process:

For a step-by-step guide on integrating Arthur Shield into your existing LLM application, check out Arthur Shield Quickstart.
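As a rough illustration of the "before and after" call pattern described above, the sketch below wraps an LLM call with one check on the prompt and one check on the response. It does not use the real Arthur Shield API: `CheckResult`, `guarded_completion`, and the `fake_*` functions are hypothetical stand-ins invented for this example, and the keyword checks are placeholders for whatever rules your deployment configures. Refer to the Arthur Shield Quickstart for the actual endpoints and payloads.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class CheckResult:
    """Outcome of one (hypothetical) validation call."""
    passed: bool
    failed_rules: List[str]


# Hypothetical shape: a validator takes text and returns a CheckResult.
Validator = Callable[[str], CheckResult]

FALLBACK = "Sorry, I can't help with that request."


def guarded_completion(
    prompt: str,
    validate_prompt: Validator,
    call_llm: Callable[[str], str],
    validate_response: Validator,
) -> str:
    """Check the prompt, call the LLM, then check the response."""
    if not validate_prompt(prompt).passed:
        return FALLBACK  # a blocked prompt never reaches the LLM

    response = call_llm(prompt)

    if not validate_response(response).passed:
        return FALLBACK  # a blocked response never reaches the user
    return response


# Stand-ins so the sketch runs on its own. In a real integration these
# would be calls to the firewall API and to your LLM endpoint.
def fake_validate_prompt(text: str) -> CheckResult:
    failed = ["prompt_injection"] if "ignore previous instructions" in text.lower() else []
    return CheckResult(passed=not failed, failed_rules=failed)


def fake_validate_response(text: str) -> CheckResult:
    failed = ["pii"] if "ssn" in text.lower() else []
    return CheckResult(passed=not failed, failed_rules=failed)


def fake_llm(prompt: str) -> str:
    return f"Echo: {prompt}"


if __name__ == "__main__":
    print(guarded_completion("Hello", fake_validate_prompt, fake_llm, fake_validate_response))
```

Passing the validators and the LLM call in as arguments keeps the control flow independent of any particular client library, so the stand-ins can be swapped for real calls without changing `guarded_completion`.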

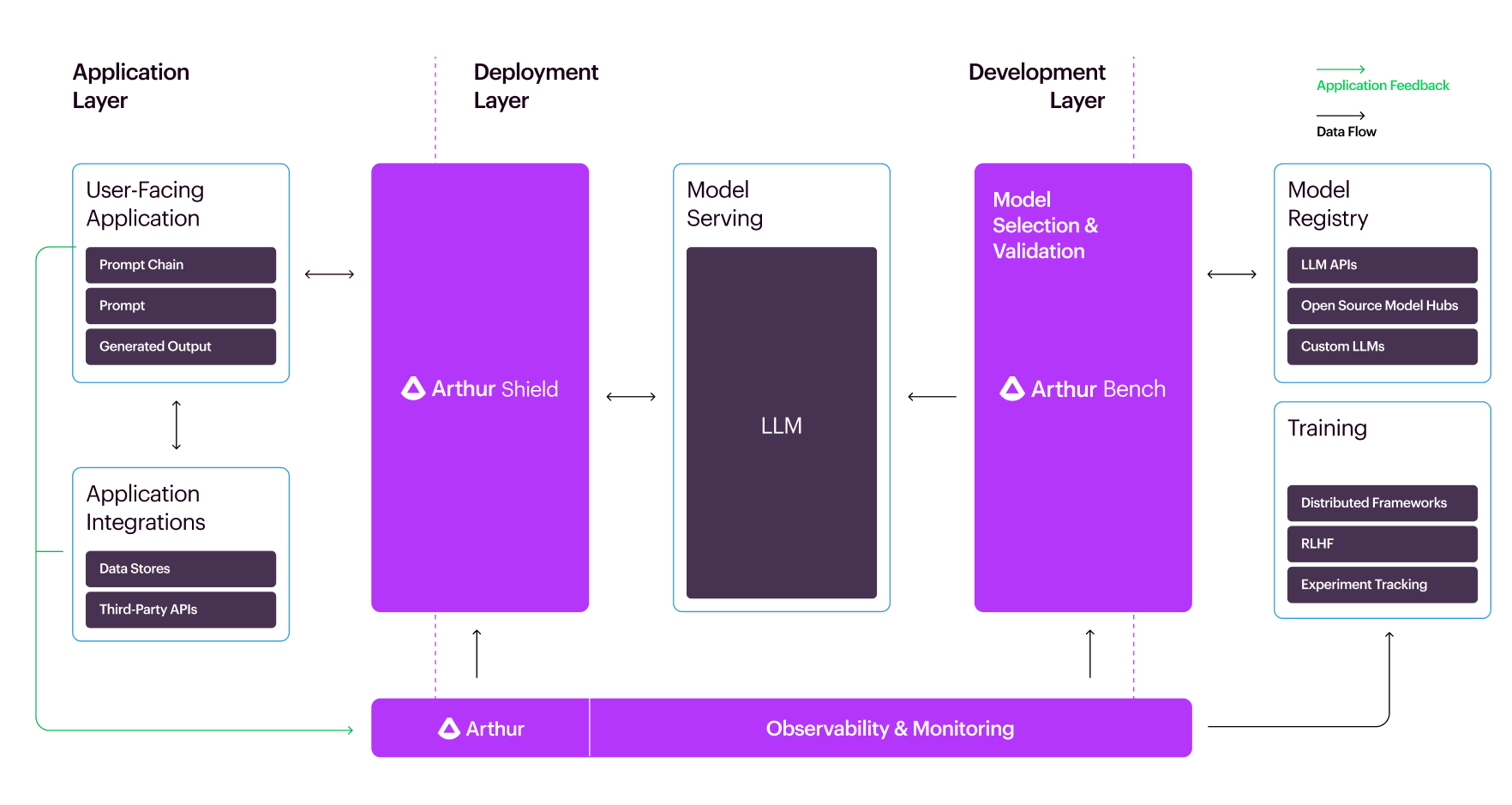

How does Arthur fit into my overall LLM stack?

In practice, Arthur Shield sits between your application and wherever your LLM is hosted, whether that is a public endpoint or a self-hosted LLM within your own cloud environment. We offer a flexible deployment design that works with your ML infrastructure and can be deployed as SaaS or on-premises. Use the Setting Up Shield guide to understand how to get access quickly, depending on your organization's deployment requirements.

Arthur Shield is a key component within a suite of LLM products Arthur offers to help teams go from development to safeguarded deployment to active monitoring and continued improvement for LLM systems. Check out our additional LLM product offerings:

- Arthur Bench: Arthur Bench is an open-source tool for evaluating LLMs for production use cases. Whether you are comparing different LLMs, considering different prompts, or testing generation hyperparameters like temperature and number of tokens, Bench provides one touch point for all your LLM performance evaluation.

- Arthur Observability for LLMs: Arthur's Observability Platform helps data scientists, ML engineers, product owners, and business leaders accelerate model operations at scale. Our platform monitors, measures, and improves machine learning models.

Looking for a turnkey solution to jumpstart your first LLM use case?

We know that many teams are early in their LLM journey and still figuring out ways to build valuable applications with best practices for safety right from the start. Arthur Chat is the fastest way to unlock value from LLMs, providing the first turnkey AI chat platform built on top of your enterprise documents and data, automatically protected by Arthur Shield, ensuring a safe deployment.

Jump ahead to Arthur Chat Overview to get started!
