Hallucination

A hallucination is a generated response that is incorrect or unfaithful with respect to the user input and source knowledge.

Types of Hallucinations

We can further classify hallucinations into two sub-categories:

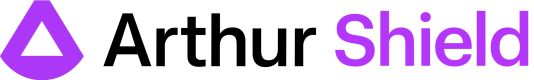

Intrinsic Hallucination

Given some source documents and a user prompt, the output provided by the LLM directly contradicts the information in the document.

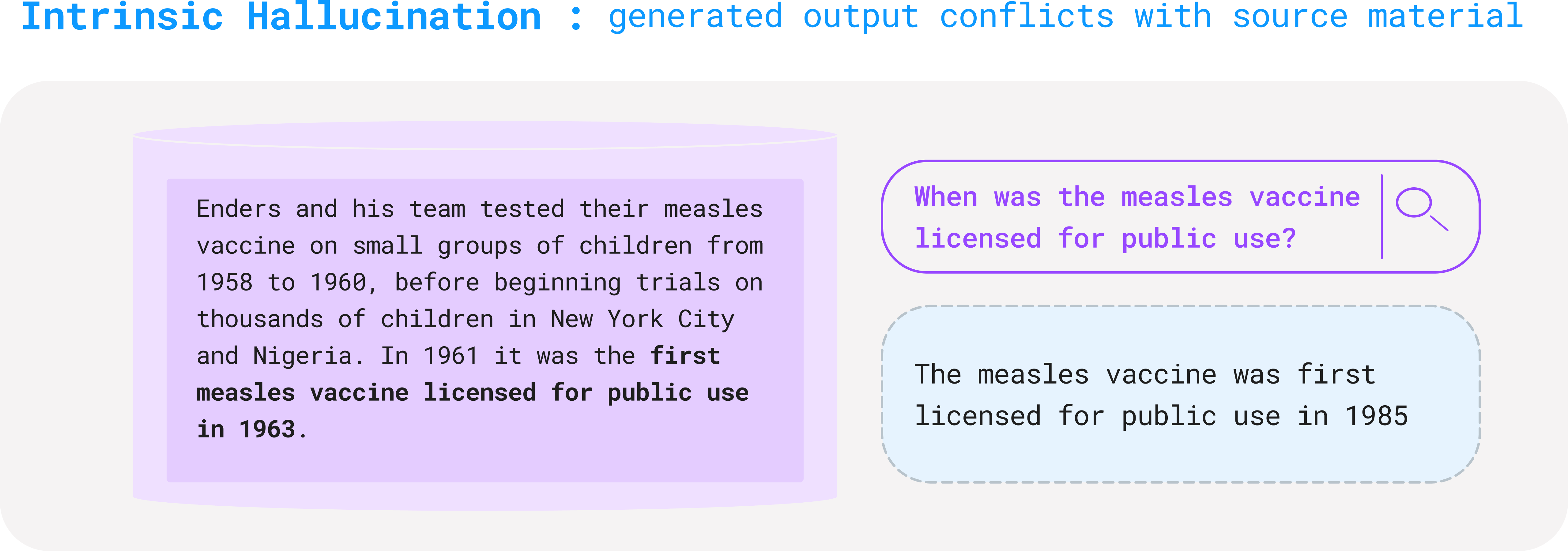

Extrinsic Hallucination

This type of hallucination occurs when the LLM output may or may not be factually correct, but the information it contains does not appear in the context supplied with the user prompt.

The Shield Approach

The main risk of hallucination in real-world use cases is misinforming users, which can lead to incorrect business decisions. This matters especially when an application supplies proprietary or domain-specific data to an LLM through augmented data retrieval, because users then rely on the model to interpret that data correctly.

Arthur Shield checks for intrinsic and extrinsic hallucinations, where an LLM’s output can be fact-checked against its context. Our current hallucination check utilizes a proprietary prompting technique with an LLM to evaluate whether a generated response should be flagged.

Claim-by-claim evaluation

Before using our LLM evaluator, we parse the provided response into chunks of text so that we can localize which chunk, if any, contains hallucinated information.

Once the evaluation is complete, we return a Fail result when any chunk of text contains a hallucination. We return details indicating the shield result for each chunk of text so you can see whether the hallucination is throughout the entire response, or whether it is localized to a single claim.

Detecting Claims

Not all chunks are passed to our LLM evaluator. We use our own classification model trained to detect when text contains a claim that needs evaluation. This classifier has been trained to return False on dialog like "Let me know if you have any further questions!", as well as responses that avoid making claims like "I'm sorry, I don't have information about that stock price in the reference documents". In contrast, the classifier has been trained to return True when an LLM is attempting to actually answer the user's query with the context.

If a chunk of text is not classified as a claim, then you will see it in your Shield response with the fields "valid": true, and "reason": "Not evaluated for hallucination."

Evaluating Claims

If a chunk of text in your response is classified by our model as a claim, then we pass it along to our LLM evaluator.

If the LLM evaluator finds no hallucination, then you will see it in your Shield response with the fields "valid": true, and "reason": "No hallucination detected!". Otherwise, if a chunk of text is classified as a claim, but the LLM evaluator detects a hallucination, then you will see it in your Shield response with the fields "valid": false, and a "reason" written by the evaluator.
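The flow described above (chunk the response, detect claims, evaluate claims, fail if any chunk is flagged) can be sketched roughly as follows. This is an illustrative outline only, not Shield's implementation: `split_into_chunks`, `is_claim`, and `evaluate_claim` are hypothetical stand-ins for the proprietary chunker, claim classifier, and LLM evaluator, and their keyword and substring checks are placeholders. The `"result"` and `"claim"` keys and the `"Pass"` value are also assumptions; only the `"valid"` and `"reason"` fields, the two fixed reason strings, and the Fail result come from the text above.

```python
import re
from typing import Dict, List


def split_into_chunks(response: str) -> List[str]:
    """Hypothetical chunker: naive split after ., ! or ? followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", response) if s.strip()]


def is_claim(chunk: str) -> bool:
    """Hypothetical stand-in for the claim classifier (placeholder heuristic)."""
    non_claim_openers = ("let me know", "i'm sorry")
    return not chunk.lower().startswith(non_claim_openers)


def evaluate_claim(chunk: str, context: str) -> Dict[str, object]:
    """Hypothetical stand-in for the LLM evaluator (placeholder substring check)."""
    if chunk.rstrip(".!?").lower() in context.lower():
        return {"valid": True, "reason": "No hallucination detected!"}
    return {"valid": False, "reason": "Claim is not supported by the context."}


def check_hallucination(response: str, context: str) -> Dict[str, object]:
    claims = []
    for chunk in split_into_chunks(response):
        if is_claim(chunk):
            result = evaluate_claim(chunk, context)
        else:
            # Non-claims are never sent to the evaluator.
            result = {"valid": True, "reason": "Not evaluated for hallucination."}
        claims.append({"claim": chunk, **result})
    # The rule fails as soon as any chunk is flagged.
    overall = "Pass" if all(c["valid"] for c in claims) else "Fail"
    return {"result": overall, "claims": claims}


context = "Acme reported revenue of $10M in 2023."
response = (
    "Acme reported revenue of $10M in 2023. "
    "Acme has 500 employees. "
    "Let me know if you have any further questions!"
)
report = check_hallucination(response, context)
```

In this toy run the second chunk is the only one flagged, so the overall result is a Fail while the per-chunk details show that the problem is localized to a single claim.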

Requirements

For this technique to work, both the response and the relevant context for that response must be provided to the Validate Response endpoint.

| | Prompt | Response | Context Needed? |
|---|---|---|---|
| Hallucination | | ✅ | ✅ |

Context is required in order for the Hallucination rule to run. If your application does not provide additional context to the LLM, please disable the Hallucination rule to avoid additional latency.

Benchmarks

The benchmark datasets we assembled contain samples from the following datasets.

- The Dolly dataset from Databricks
  - This dataset was compiled by Databricks employees in early 2023 by prompting an LLM to respond to questions (while also providing a context). The original responses mostly contained claims that were all supported by their context, so we extended the dataset internally with examples of hallucinated responses (synthetically generated by gpt-3.5-turbo-0314).
- The Wikibio GPT-3 Hallucination dataset from the University of Cambridge
  - This dataset was compiled by prompting GPT-3 to write biographies, many of which contain hallucinated facts. Those hallucinations are visible in the public dataset on HuggingFace.

When running the benchmarks, we sample N = 50 rows from each dataset.

Each row from our benchmark datasets contains a context, an llm_response, and a binary_label for each claim in the LLM response (the binary_label for each row is a string of one or more labels, one label for each claim). The label False for a claim indicates that the claim is not supported by its context. It does NOT mean the claim is false on its own - claims that are true on their own are considered hallucinations by our rule if they lack evidence in their context.

The contexts can occasionally get rather long - we are only benchmarking LLMs with a context window of at least 16k tokens.

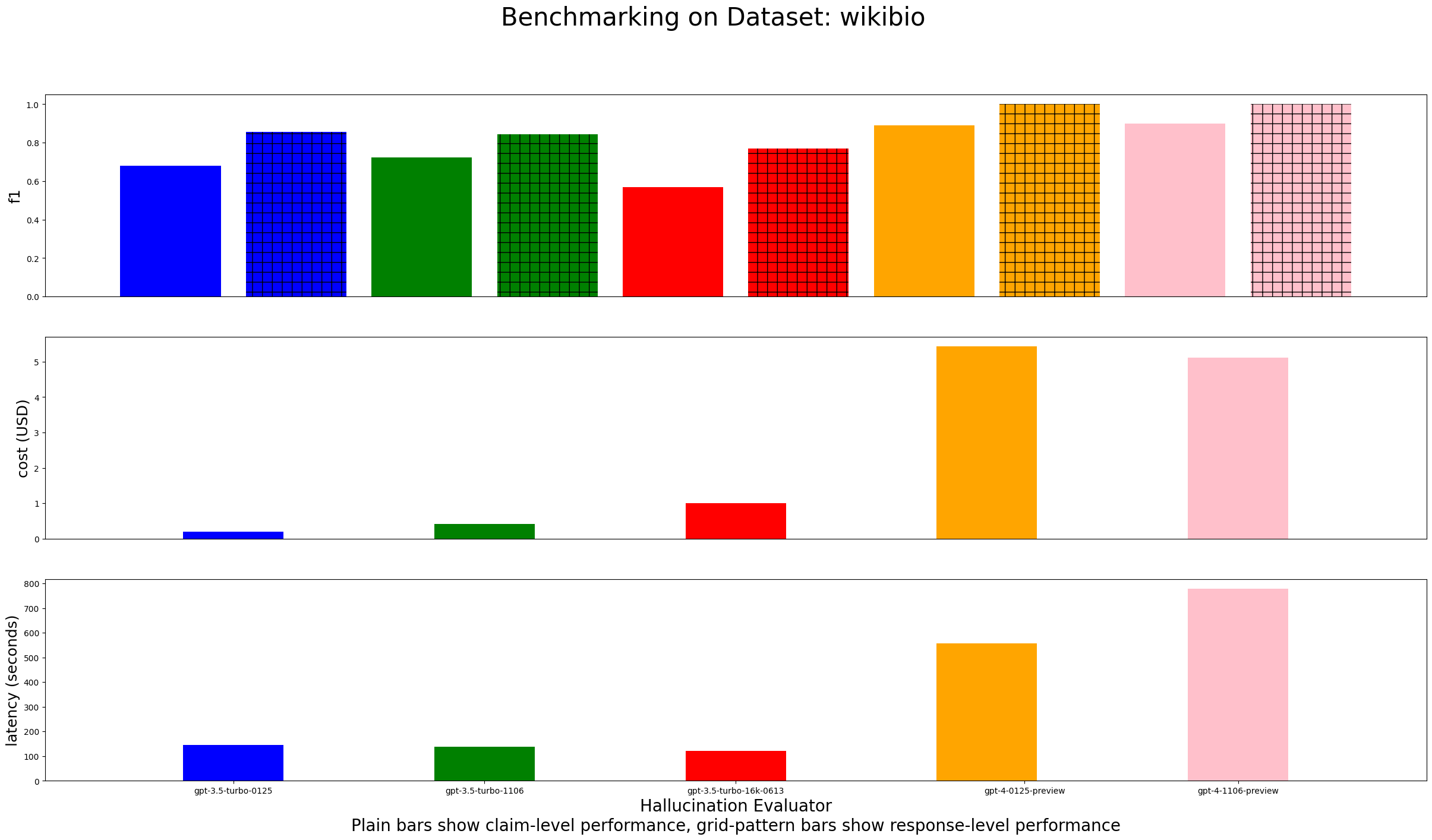

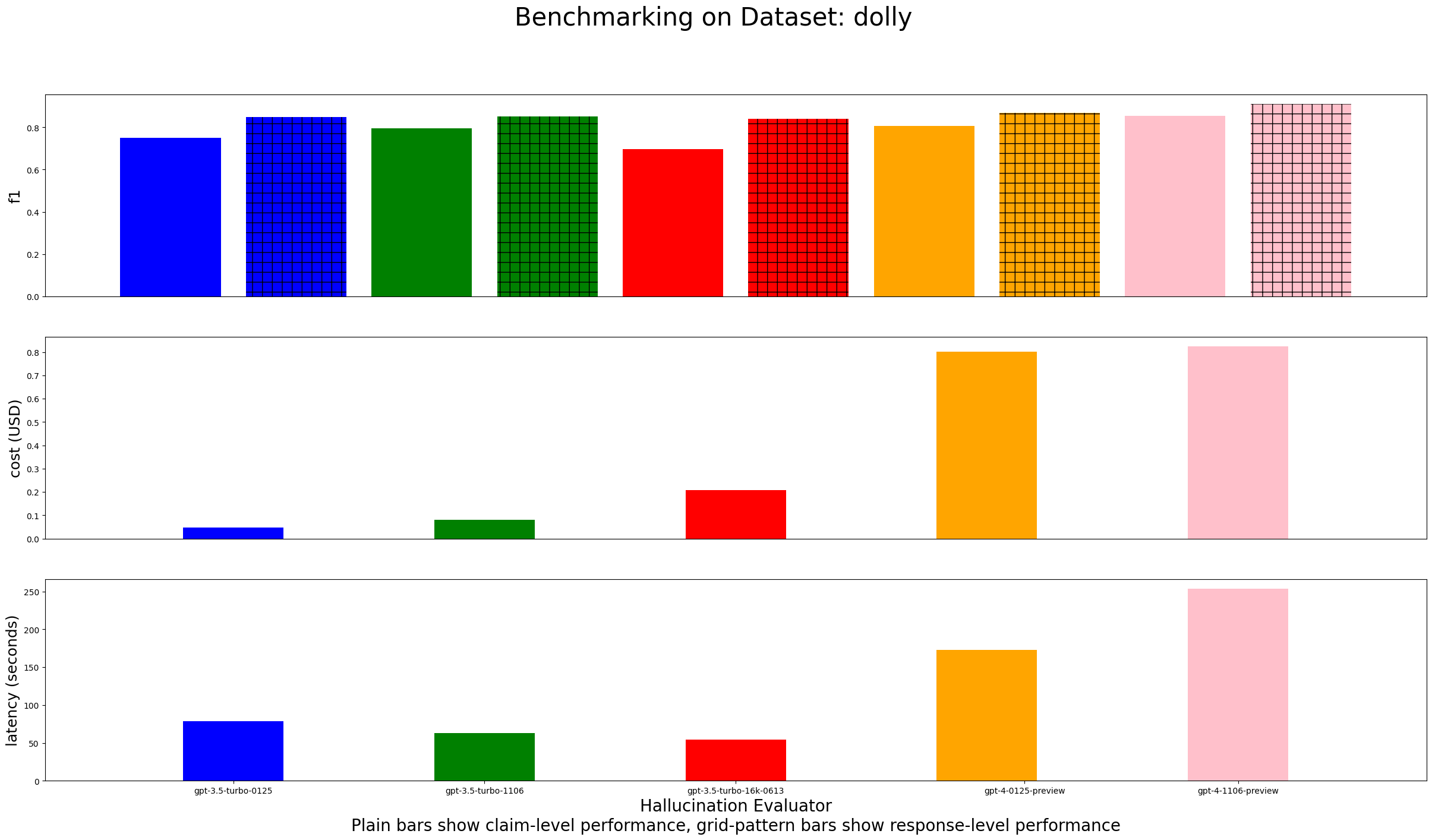

Response-level & Claim-level performance

We are reporting our hallucination detection performance on two different levels: response-level and claim-level.

For example, if an LLM response contains 3 sentences, the second of which contains a hallucination, then response-level detection would classify the response as a hallucination. In contrast, claim-level detection would classify the second sentence as a hallucination and the first/third sentences as supported.

Response-level performance looks only at the overall shield result: did the shield rule fail or not? Was there a hallucination somewhere in the LLM response? Perfect response-level performance does not require labelling individual claims within a response accurately. It only requires the shield result to fail exactly when a hallucination is present anywhere in the LLM response.

Claim-level performance is stricter: which claims did the shield result label as valid or invalid? Are those the claims that are actually faithful to the context?

You can typically expect response-level performance to be stronger than claim-level performance.
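A small sketch may help show why the two levels can diverge. The boolean lists below are hypothetical data (True meaning "hallucinated"), not benchmark rows; the only rule taken from the text is that a response counts as hallucinated when any of its claims is.

```python
from typing import List


def response_is_hallucinated(claim_flags: List[bool]) -> bool:
    """A response is hallucinated if any claim is flagged (True = hallucinated)."""
    return any(claim_flags)


# Hypothetical 3-sentence response: only the second claim is truly hallucinated.
truth = [False, True, False]
# Hypothetical detector output: it flags the third claim instead of the second.
predicted = [False, False, True]

response_level_correct = response_is_hallucinated(truth) == response_is_hallucinated(predicted)
claim_level_correct = [t == p for t, p in zip(truth, predicted)]

print(response_level_correct)  # True
print(claim_level_correct)     # [True, False, False]
```

Here the detector gets full credit at the response level even though it mislabels two of the three claims, which is one way response-level scores can end up higher than claim-level scores.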

Consult the Azure OpenAI Model documentation to see which models work best for your deployment.

Benchmark Data

Note on Cost & Latency: Numbers represent the totals for an entire benchmark set of N = 50. Cost is computed as the number of prompt and completion tokens used, each multiplied by its respective price. We only report cost and latency in the response-level portion of the table, since those metrics do not change whether one measures performance at the response level or at the claim level.
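As a rough illustration of the cost calculation, the sketch below multiplies each token count by its own price and sums over a benchmark set. The function names, the per-1,000-token pricing unit, the token counts, and the prices are all placeholder assumptions, not the values used to produce the table.

```python
from typing import Iterable, Tuple


def call_cost_usd(
    prompt_tokens: int,
    completion_tokens: int,
    prompt_price_per_1k: float,
    completion_price_per_1k: float,
) -> float:
    """Cost of one evaluator call: each token count times its own price."""
    return (
        (prompt_tokens / 1000) * prompt_price_per_1k
        + (completion_tokens / 1000) * completion_price_per_1k
    )


def benchmark_cost_usd(
    usage: Iterable[Tuple[int, int]],
    prompt_price_per_1k: float,
    completion_price_per_1k: float,
) -> float:
    """Total cost over all (prompt_tokens, completion_tokens) pairs in a benchmark."""
    return sum(
        call_cost_usd(p, c, prompt_price_per_1k, completion_price_per_1k)
        for p, c in usage
    )


# Hypothetical token usage for two calls and placeholder prices (USD per 1k tokens).
usage = [(1200, 300), (800, 200)]
total = benchmark_cost_usd(usage, prompt_price_per_1k=0.01, completion_price_per_1k=0.03)
print(round(total, 4))  # 0.035
```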

Note on TRUE/FALSE class for metrics: for the purpose of our metrics calculations for precision, recall, and F1, we consider the TRUE class of the task of hallucination detection to be the class of hallucinated claims (claims which were not supported by their corresponding context), and the FALSE class to be the class of supported claims (claims which were indeed supported by their context). This is despite the fact that, in our datasets themselves, we label the claims as True when they are supported by the context (AKA not a hallucination).
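The class convention above can be made concrete with a short sketch. It assumes, purely for illustration, that dataset labels and Shield's per-claim `valid` values are available as lists of booleans (True meaning "supported" and "valid" respectively); both lists are inverted so that the positive class is "hallucinated" before precision, recall, and F1 are computed. The function name and sample data are hypothetical.

```python
from typing import List, Tuple


def hallucination_metrics(
    dataset_labels: List[bool], predicted_valid: List[bool]
) -> Tuple[float, float, float]:
    """Precision, recall, and F1 with 'hallucinated' as the positive class.

    dataset_labels: True when the claim is supported by its context.
    predicted_valid: True when the detector found no hallucination.
    """
    # Invert both so that True means "hallucinated".
    y_true = [not label for label in dataset_labels]
    y_pred = [not valid for valid in predicted_valid]

    tp = sum(t and p for t, p in zip(y_true, y_pred))
    fp = sum((not t) and p for t, p in zip(y_true, y_pred))
    fn = sum(t and (not p) for t, p in zip(y_true, y_pred))

    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = 2 * precision * recall / (precision + recall) if (precision + recall) else 0.0
    return precision, recall, f1


# Hypothetical data: three of five claims are unsupported; the detector catches two.
dataset_labels = [True, False, False, True, False]
predicted_valid = [True, False, True, True, False]
precision, recall, f1 = hallucination_metrics(dataset_labels, predicted_valid)
print(round(precision, 2), round(recall, 2), round(f1, 2))  # 1.0 0.67 0.8
```

High precision with lower recall, as in this toy example, means the detector rarely flags supported claims but misses some hallucinated ones.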

| Level | Dataset | Model | Cost (USD) | Latency (s) | Precision | Recall | F1 |
|---|---|---|---|---|---|---|---|
| response | wikibio | gpt-4-1106-preview | 5.11 | 779.15 | 1.0 | 1.0 | 1.0 |
| response | wikibio | gpt-4-0125-preview | 5.43 | 558.26 | 1.0 | 1.0 | 1.0 |
| response | wikibio | gpt-3.5-turbo-16k-0613 | 1.01 | 120.64 | 1.0 | 0.62 | 0.77 |
| response | wikibio | gpt-3.5-turbo-1106 | 0.42 | 139.16 | 1.0 | 0.73 | 0.84 |
| response | wikibio | gpt-3.5-turbo-0125 | 0.2 | 145.68 | 1.0 | 0.75 | 0.86 |
| response | dolly | gpt-4-1106-preview | 0.82 | 253.85 | 0.96 | 0.86 | 0.91 |
| response | dolly | gpt-4-0125-preview | 0.8 | 172.91 | 0.96 | 0.79 | 0.87 |
| response | dolly | gpt-3.5-turbo-16k-0613 | 0.21 | 54.74 | 1.0 | 0.72 | 0.84 |
| response | dolly | gpt-3.5-turbo-1106 | 0.08 | 62.94 | 0.92 | 0.79 | 0.85 |
| response | dolly | gpt-3.5-turbo-0125 | 0.05 | 78.35 | 0.83 | 0.86 | 0.85 |
| claim | wikibio | gpt-4-1106-preview | - | - | 0.88 | 0.92 | 0.9 |
| claim | wikibio | gpt-4-0125-preview | - | - | 0.88 | 0.9 | 0.89 |
| claim | wikibio | gpt-3.5-turbo-16k-0613 | - | - | 0.85 | 0.43 | 0.57 |
| claim | wikibio | gpt-3.5-turbo-1106 | - | - | 0.83 | 0.64 | 0.72 |
| claim | wikibio | gpt-3.5-turbo-0125 | - | - | 0.86 | 0.56 | 0.68 |
| claim | dolly | gpt-4-1106-preview | - | - | 0.97 | 0.76 | 0.85 |
| claim | dolly | gpt-4-0125-preview | - | - | 0.97 | 0.69 | 0.81 |
| claim | dolly | gpt-3.5-turbo-16k-0613 | - | - | 0.96 | 0.55 | 0.7 |
| claim | dolly | gpt-3.5-turbo-1106 | - | - | 0.94 | 0.69 | 0.79 |
| claim | dolly | gpt-3.5-turbo-0125 | - | - | 0.79 | 0.71 | 0.75 |

Required Rule Configurations

No additional configuration is required for the Hallucination rule. For more information on how to add or enable/disable the Hallucination rule by default or for a specific Task, please refer to our Rule Configuration Guide.
