Rules Overview Guide

Arthur Shield is constantly evolving, so check back regularly for new checks and availability. Arthur Shield currently supports rules for the following:

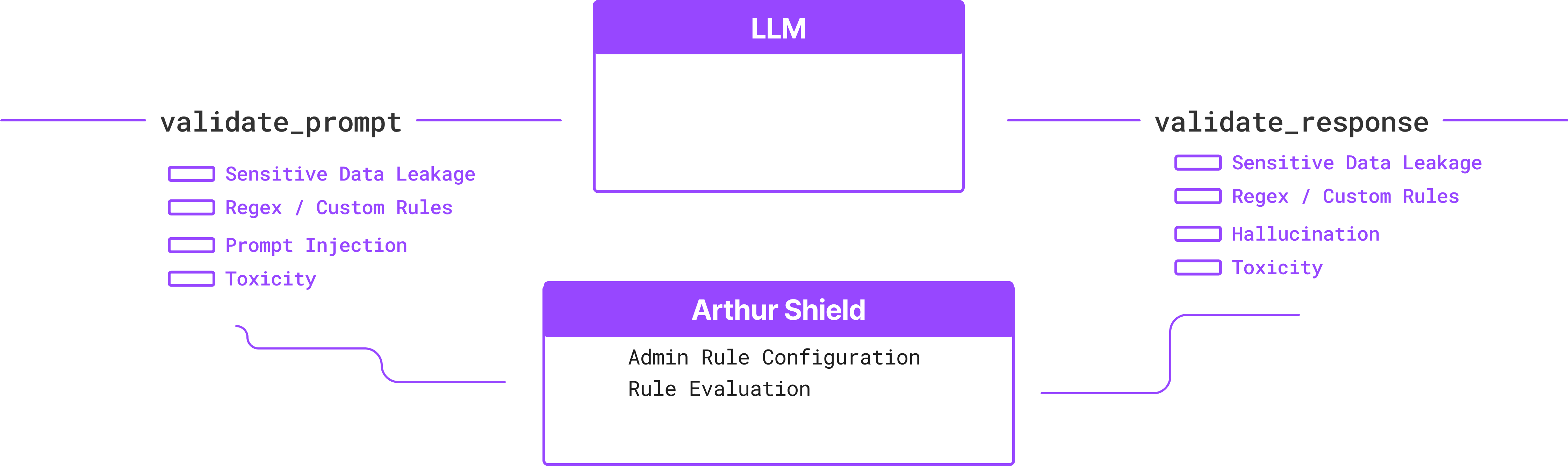

Rule Placement

As described in the Arthur Shield Quickstart, rules can be checked at two points in the Shield architecture:

Validate Prompt:

Think of prompt validation as a true firewall that blocks anything suspicious. Because the input to an LLM influences its output (for example, toxic language in, toxic language out, or PII in, PII out), you can use prompt validation to proactively block poor LLM behavior. Teams that block these inputs also save an LLM call whenever validate_prompt triggers: instead of calling the model, they respond to the user that the prompt has been blocked.
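The control flow of this firewall pattern can be sketched in Python. This is a minimal sketch, not the Shield client API: `validate_prompt` and `call_llm` are hypothetical callables you would wire to your Shield client and your LLM, and `RuleResult` is an assumed result shape with one pass/fail flag per rule. The key point is that the LLM is only called when every prompt rule passes.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class RuleResult:
    # Hypothetical result shape: one entry per rule evaluated on the prompt.
    rule_name: str
    passed: bool


BLOCKED_MESSAGE = "Your prompt was blocked by our content policy."


def answer(
    prompt: str,
    validate_prompt: Callable[[str], List[RuleResult]],
    call_llm: Callable[[str], str],
) -> str:
    """Gate the LLM call on prompt validation (callables are hypothetical)."""
    results = validate_prompt(prompt)
    if not all(r.passed for r in results):
        # A rule triggered: skip the LLM call entirely and tell the user.
        return BLOCKED_MESSAGE
    # Every prompt rule passed, so it is safe to spend an LLM call.
    return call_llm(prompt)
```

Passing the validator and model in as callables keeps the sketch independent of any particular client library and makes it easy to test with stubs.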

Validate Response:

Validating LLM responses allows teams to shield users from unwanted model outputs. While many out-of-the-box LLM solutions continuously improve their general offering, production applications often have more specialized requirements for LLM responses. The most popular response-validation checks are therefore use-case dependent: teams configure their response rules for their specific task and domain.
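A matching sketch for the response side, under the same kind of assumptions: `validate_response` is a hypothetical callable that receives the prompt and the LLM response and returns one assumed `RuleResult` per rule. If any rule fails, the user sees a fallback message instead of the raw output, and the failed rule names are returned so the caller can log them.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class RuleResult:
    # Hypothetical result shape; not the actual Shield response schema.
    rule_name: str
    passed: bool


FALLBACK_RESPONSE = "Sorry, I can't share that response."


def safe_reply(
    prompt: str,
    llm_response: str,
    validate_response: Callable[[str, str], List[RuleResult]],
) -> Tuple[str, List[str]]:
    """Return (text shown to the user, names of failed rules)."""
    results = validate_response(prompt, llm_response)
    failed = [r.rule_name for r in results if not r.passed]
    # Shield the user from the raw output if any response rule failed.
    reply = FALLBACK_RESPONSE if failed else llm_response
    return reply, failed
```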

Rule Configuration

Required Inputs for Rules

Depending on which rule you implement, different information needs to be passed into the Validate Prompt or Validate Response endpoints.

| Rule | Prompt | Response | Context Needed? |
|---|---|---|---|
| Prompt Injection | ✅ | | |
| Sensitive Data | ✅ | ✅ | |
| Hallucination | ✅ | ✅ | |
| PII Data Rule | ✅ | ✅ | |
| Custom Regex Rules | ✅ | ✅ | |
| Toxicity | ✅ | ✅ | |
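If you build requests programmatically, the table above can be encoded as data for a pre-flight check before calling either endpoint. The sketch below is illustrative only: `REQUIRED_INPUTS` and `missing_inputs` are hypothetical names, not part of Shield, and the mapping mirrors the table's ✅ marks as written.

```python
from typing import Dict, FrozenSet, Set

# The "Required Inputs for Rules" table encoded as data (rule -> inputs marked ✅).
# No rule is marked under "Context Needed?", so context is not listed here.
REQUIRED_INPUTS: Dict[str, FrozenSet[str]] = {
    "Prompt Injection": frozenset({"prompt"}),
    "Sensitive Data": frozenset({"prompt", "response"}),
    "Hallucination": frozenset({"prompt", "response"}),
    "PII Data Rule": frozenset({"prompt", "response"}),
    "Custom Regex Rules": frozenset({"prompt", "response"}),
    "Toxicity": frozenset({"prompt", "response"}),
}


def missing_inputs(rule: str, provided: Set[str]) -> Set[str]:
    """Return the inputs the table requires for `rule` that are not in `provided`."""
    if rule not in REQUIRED_INPUTS:
        raise KeyError(f"unknown rule: {rule!r}")
    return set(REQUIRED_INPUTS[rule] - provided)
```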

Required Rule Configurations

The table below summarizes the additional information that is not passed through the API at inference time but is instead defined when you create the rule.

| Rule | What's required for configuring this rule? |
|---|---|
| Hallucination | N/A |
| Prompt Injection | N/A |
| PII | N/A |
| Sensitive Data | Examples of sensitive data leakage to avoid |
| Custom Rules | Provide regex or keyword-matching patterns |
| Toxicity | Threshold for classifying toxicity between 0 and 1, defaults to 0.5 |
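To make the two parameterized rules concrete, here is a sketch of how their settings might be represented and applied locally. `ToxicityRuleConfig` and `CustomRuleConfig` are hypothetical classes, not Shield's configuration schema; only the 0 to 1 range, the 0.5 default, and the regex/keyword idea come from the table. Treating a score equal to the threshold as toxic is an assumption.

```python
import re
from dataclasses import dataclass, field
from typing import List


@dataclass
class ToxicityRuleConfig:
    # Hypothetical config object; range [0, 1] and default 0.5 come from the table.
    threshold: float = 0.5

    def __post_init__(self) -> None:
        if not 0.0 <= self.threshold <= 1.0:
            raise ValueError("toxicity threshold must be between 0 and 1")

    def is_toxic(self, score: float) -> bool:
        # Assumption: a score at or above the threshold is classified as toxic.
        return score >= self.threshold


@dataclass
class CustomRuleConfig:
    # Hypothetical config: regex patterns and plain keywords that should trigger the rule.
    regex_patterns: List[str] = field(default_factory=list)
    keywords: List[str] = field(default_factory=list)

    def matches(self, text: str) -> bool:
        """True if any regex matches or any keyword appears (keywords are case-insensitive)."""
        if any(re.search(p, text) for p in self.regex_patterns):
            return True
        lowered = text.lower()
        return any(k.lower() in lowered for k in self.keywords)
```

For example, `CustomRuleConfig(regex_patterns=[r"\b\d{3}-\d{2}-\d{4}\b"])` would flag text containing an SSN-shaped number.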

Find more information and full configuration examples in the Rule Configuration Guide.
